Letting Out Steam: Reproducibility Problems

During the first week of December, a series of seminars and events were held in London that made up STM week. While attendance may have been a little down from previous years for the innovations seminar that I was a part of, there seems to be an increasing number of events and dinners surrounding the week, including Digital Science’s Shaking It Up Event.

The first part of the STM innovations seminar focused on the problems of reproducibility in science. For some years now, there have been voices of concern noting that when previously reported results are tested, the data very often don’t come out the same way. During the seminar, Andrew Hufton of Scientific Data went so far as to state that progress in the pharmaceutical sciences is being held back by a lack of reliability in the basic literature. A number of causes have been suggested for this apparent lack of reproducibility. They range from p-hacking, where data sets are repeatedly analysed under different conditions, grouping and ungrouping variables until a significant result appears, to the so-called file drawer problem, where negative results that might argue against a hypothesis are filed away and never published.

The presence of too many unreproducible results in the basic literature is a difficult problem to approach. One complication was highlighted by Kent Anderson, publisher of Science magazine at AAAS. Anderson made the valuable point that sometimes research is unreproducible because experiments are difficult; just because the second dataset disagrees with the first doesn’t mean that the first is wrong. He also noted that experimental error is often unintended and often not material to the conclusions. There is undoubtedly validity to this, and there is a risk that good work may get caught up in some manner of scientific fraud witch hunt.

My fear is that we run the risk of getting into a false dichotomy of asking whether the scientific record and method are just fine the way they are, or whether huge numbers of scientists are engaging in massive and deliberate fraud. It’s entirely possible that we have a serious problem with reproducibility requiring decisive action, and that everybody involved in the scientific effort has acted in good faith. Certainly, in my experience of entering biology from a quantitative background, I saw researchers regularly repeating underpowered experiments until a simple significance test came out positive, and declaring that to be the only experiment that worked. Typically, they didn’t have any idea that they were doing anything wrong and when challenged, would honestly state that experiments don’t work all the time because science can be messy. We were working at the edges of what could be measured, after all. Perhaps that is often true, but by the same token, if that positive result could as easily be a false positive as a real effect that is hard to see, the experimenter may as well be tossing a coin.
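To put rough numbers on that habit, here is a minimal simulation sketch. Everything in it is my own assumption for illustration rather than anything presented at the seminar: there is no real effect at all, each experiment compares two groups of ten normally distributed measurements with a simple z-test, and the conventional 0.05 threshold is used. The function names are hypothetical.

```python
import math
import random

ALPHA = 0.05  # conventional significance threshold (an assumption of this sketch)


def p_value_two_sample_z(a, b, sigma=1.0):
    """Two-sided z-test p-value for a difference in means, with known sigma."""
    diff = sum(a) / len(a) - sum(b) / len(b)
    se = sigma * math.sqrt(1 / len(a) + 1 / len(b))
    z = diff / se
    # P(|Z| > |z|) for a standard normal Z
    return math.erfc(abs(z) / math.sqrt(2))


def repeat_until_significant(max_attempts, n_per_group, rng):
    """Run up to max_attempts experiments with NO true effect.

    Returns True if any attempt reaches p < ALPHA, i.e. the experiment
    eventually 'worked' even though there was nothing to find.
    """
    for _ in range(max_attempts):
        a = [rng.gauss(0, 1) for _ in range(n_per_group)]
        b = [rng.gauss(0, 1) for _ in range(n_per_group)]
        if p_value_two_sample_z(a, b) < ALPHA:
            return True
    return False


def false_positive_rate(max_attempts, n_per_group=10, n_labs=5000, seed=0):
    """Fraction of simulated labs that end up with a 'positive' result."""
    rng = random.Random(seed)
    hits = sum(
        repeat_until_significant(max_attempts, n_per_group, rng)
        for _ in range(n_labs)
    )
    return hits / n_labs


def analytic_rate(max_attempts, alpha=ALPHA):
    """Chance of at least one false positive in independent attempts."""
    return 1 - (1 - alpha) ** max_attempts


if __name__ == "__main__":
    for attempts in (1, 5, 14):
        print(attempts, analytic_rate(attempts), false_positive_rate(attempts))
```

Under these assumptions, the chance that at least one attempt comes out "significant" is 1 - (1 - 0.05)^k for k independent attempts: about 23% after five tries and just over 50% after fourteen. Real experiments differ in many ways from this toy setup, but it shows how an honest researcher who keeps only the run that "worked" can drift a long way from the nominal 5% error rate.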

If the problem were as simple as a lack of researcher understanding of proper stats and experimental design, it could be addressed easily through education and raising the bar at the editorial and peer-review level, but there’s a more insidious mechanism at work.

The problem that I see is a result of the intense pressure that researchers are now under to generate positive, high-impact results in order to advance their careers. The increasingly steep career funnel from post-doc to tenure means that researchers can easily get overly invested in the success of an experiment. Under those conditions, it is impossible for an investigator to remain dispassionate and the experimenter effect inevitably becomes amplified. It becomes too easy for a researcher to convince themselves that the experiment should come out the way their hypothesis says, and so they repeat it until it ‘works’. When a graduate student desperately needs a publication to get into the post-doc program that they feel their life depends on, it’s impossible not to let that influence which microscope field of view they pick when they are trying to choose at random, while quantifying the difference between one obviously diseased brain sample and an obviously healthy one. These effects happen even when the researcher is trying their best. They’re doing nothing dishonest, or even deliberate; they’re just human beings with the same unconscious biases as the rest of us.

This might all sound a bit depressing, but there is light at the end of the tunnel. There’s a movement towards experimental pre-registration, where researchers state their hypothesis and methods before taking data. Some journals are appearing that accept articles based on methods and preliminary data before the experiments are conducted, irrespective of whether the final result comes out positive. This is an interesting way to try to prevent publication bias and the file drawer problem, but critics worry that it will discourage exploratory science. There are also efforts, particularly in psychology, to replicate experiments as a vital third-party cross-check.

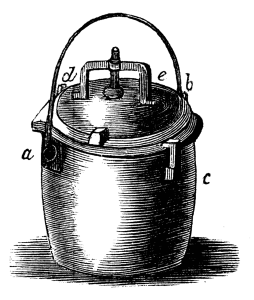

If we’re going to solve this problem fully, we have to stop thinking about erroneous results as necessarily the result of fraud. We need to be honest with ourselves about what it’s like to be a real person doing science today, how science is done in practice, and the kinds of psychological pressure we put researchers under. The movements towards pre-registration and the replication projects are helpful, but they’ll only go so far if we don’t mitigate the underlying driving forces. We’ve turned science into a pressure cooker and if we don’t let out a little steam, the reproducibility problems are likely to get worse.