Relative Citation Ratio (RCR) – A Leap Forward in Research Metrics

There is no perfect metric. There is no number or score which fully encapsulates the value, impact, or importance of a piece of research.

While this statement might appear obvious, research evaluation and measurement are a fact of life for the scientific research community. The administrative work of faculty recruitment, promotion, and tenure is coupled with activity reporting and institutional benchmarking – and these measures are increasingly central to a Research Office’s existence. The two metrics most commonly used to evaluate research output focus on funding and publications.

Funding is central to many disciplines: it is a fairly simple measure – do you have it, and how much? How do you compare with your peers? What percentage of your salary have you managed to cover through grants? If you have lab space at your institution, what is your grant-generated-dollars-per-square-foot-of-lab-space ratio?

But publications present many more nuanced challenges. Historically, achievement has been associated with volume (how much you are publishing), prestige (which journals published your work), and citations (who is referencing your work). All three measures come with cautionary tales. While the volume of publications is important, associated factors such as the area of science and the extent of co-authorship and collaboration may also need to be considered. Even so, the number of publications is relatively straightforward, much like funding. Quality measures such as “where are you publishing?” and “who is citing your work?” present unique challenges.

“Where have you published?”:

- “Are you publishing in high-quality journals?” is often confused with “are your articles having a high impact?”

- Journals are most commonly rated/ranked based on Journal Impact Factor (JIF)

- While JIF is an interesting measure of the quality of an overall journal, it tells us little about the influence of an individual article

- Research has shown that many papers considered to be “breakthroughs” in the field are published in journals with modest or moderate JIFs

- If you use journal metrics to appraise an article, how do you distinguish a great paper in a mid-tier journal versus a mediocre paper which was accepted by a top-tier journal?

“How often are you cited?”

- Many variables also make it challenging to compare results.

- Some fields, like biology, tend to cite heavily (25+ on average).

- Other fields, like physics, cite much less (under 10 on average).

- Therefore a paper with nine citations in physics might be outperforming a biology paper with 18 when the number of citations inside the field is taken into account.

- Citations vary by year – the longer a paper has been in existence, the longer it has had a chance to be cited.

- A 2014 paper with five citations might be preferable to a similar 2005 publication with six citations. The 2005 publication has more citations, but it has gained only one more despite having had nine additional years in which to be cited.

- So how do you compare different papers, published at different times, and across different fields – when you are a Research Dean or Provost at a top institution?
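The two comparisons above can be worked through as simple arithmetic. The sketch below is only an illustration of the reasoning, not the RCR itself: the field averages (10 for physics, 25 for biology) are rounded from the rough figures quoted above, the evaluation year of 2016 is an assumption made so that citations per year can be computed, and the function names are hypothetical.

```python
# Illustrative only: naive field and age adjustments using the figures quoted above.
# PHYSICS_AVG, BIOLOGY_AVG and EVALUATION_YEAR are assumptions, not measured values.

PHYSICS_AVG = 10.0      # "under 10 on average", rounded up for illustration
BIOLOGY_AVG = 25.0      # "25+ on average", rounded down for illustration
EVALUATION_YEAR = 2016  # assumed year in which the comparison is made


def field_normalized(citations: int, field_average: float) -> float:
    """Citations relative to the average citation count in the paper's field."""
    return citations / field_average


def citations_per_year(citations: int, published: int, evaluated: int) -> float:
    """Citations divided by the number of years the paper has been available."""
    years = evaluated - published
    if years <= 0:
        raise ValueError("evaluation year must be later than publication year")
    return citations / years


# Field comparison: 9 citations in physics versus 18 in biology.
physics_score = field_normalized(9, PHYSICS_AVG)   # 0.9
biology_score = field_normalized(18, BIOLOGY_AVG)  # 0.72

# Age comparison: 5 citations since 2014 versus 6 citations since 2005.
recent_rate = citations_per_year(5, 2014, EVALUATION_YEAR)  # 2.5 per year
older_rate = citations_per_year(6, 2005, EVALUATION_YEAR)   # about 0.55 per year

print(f"physics {physics_score:.2f} vs biology {biology_score:.2f}")
print(f"2014 paper {recent_rate:.2f}/yr vs 2005 paper {older_rate:.2f}/yr")
```

Under these assumptions the physics paper scores higher than the biology paper despite having half the raw citations, and the 2014 paper accrues citations several times faster than the 2005 one.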

A group at the National Institutes of Health (NIH) in the United States has been working on an answer. Building on new approaches in the field of bibliometrics, the Office of Portfolio Analysis at NIH, led by team members Bruce Ian Hutchins, Xin Yuan, James M Anderson, and George M Santangelo, has helped to develop and present the Relative Citation Ratio (RCR). The RCR normalizes a paper to its field(s) and the year it was published, so that you can make something close to an “apples to oranges” comparison of papers from different fields and different times. The RCR was first described in a paper posted on bioRxiv. You can try the RCR for any list of articles with PubMed IDs on iCite, a tool that the NIH launched to enable individual researchers to evaluate how their articles are performing.

The RCR achieves this by dividing a paper’s actual citation count by an expected citation count, giving the kind of observed-over-expected ratio that will be familiar to anyone who has worked on quality-improvement initiatives. The RCR is presented as a decimal number: a value of 1 indicates that the paper is performing as expected. A value higher than 1 is better: the article has received more citations than its peers from the same year and subject areas.
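Written as a formula, the observed-over-expected idea looks like the following. The symbols are introduced here purely for illustration and are not taken from the NIH paper: $C_{\text{obs}}$ stands for the citations a paper has actually received and $C_{\text{exp}}$ for the citations expected of a comparable paper from the same year and field.

```latex
\mathrm{RCR} = \frac{C_{\text{obs}}}{C_{\text{exp}}},
\qquad
\mathrm{RCR} = 1 \;\text{(as expected)}, \quad
\mathrm{RCR} > 1 \;\text{(above expectation)}, \quad
\mathrm{RCR} < 1 \;\text{(below expectation)}
```

As a hypothetical example, a paper with 12 citations where 8 were expected would score $12 / 8 = 1.5$.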

How is the RCR calculated?

Earlier attempts to take the field of an article into account usually took the subject area of the article’s journal and calculated a relative citation rate against all matching articles, e.g. “medicine”. While this is a reasonable approach for very well-defined journals with discrete subject areas, it fails to provide a reliable metric for articles that draw on different subject areas, or that are of interest to people outside a narrowly defined topic.

Instead of solely relying on the publishing journal’s subject area, the RCR takes into account all the articles that are cited with it. This provides a much more nuanced view of the article’s subject area, because the average reflects a more diverse blend of citation behavior.
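The idea of defining a paper’s field by the articles cited alongside it can be sketched in a few lines. This is a deliberately simplified illustration, not the NIH calculation: the data is invented, the function names are hypothetical, and the per-article citation rates stand in for whatever rates the real method averages.

```python
# Simplified sketch of a co-citation "field": every article that shares a
# reference list with the focal article. All data below is made up.
from statistics import mean


def co_cited_articles(focal: str, reference_lists: dict[str, list[str]]) -> set[str]:
    """Return the articles that appear alongside `focal` in any reference list."""
    co_cited: set[str] = set()
    for refs in reference_lists.values():
        if focal in refs:
            co_cited.update(ref for ref in refs if ref != focal)
    return co_cited


def field_citation_rate(
    focal: str,
    reference_lists: dict[str, list[str]],
    citations_per_year: dict[str, float],
) -> float:
    """Average citation rate across the focal article's co-cited neighbours."""
    neighbours = co_cited_articles(focal, reference_lists)
    return mean(citations_per_year[article] for article in neighbours)


# Citing paper -> the articles in its reference list (hypothetical).
reference_lists = {
    "citing_1": ["focal", "bio_a", "bio_b"],
    "citing_2": ["focal", "phys_a"],
    "citing_3": ["bio_a", "phys_b"],  # does not cite the focal article, so ignored
}
# Citations per year for each co-cited article (hypothetical).
citations_per_year = {"bio_a": 6.0, "bio_b": 4.0, "phys_a": 2.0, "phys_b": 1.0}

neighbours = co_cited_articles("focal", reference_lists)
rate = field_citation_rate("focal", reference_lists, citations_per_year)
print(sorted(neighbours), rate)
```

Because the focal article here is cited alongside both biology and physics papers, its expected rate blends the two fields instead of inheriting a single journal-level subject category.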

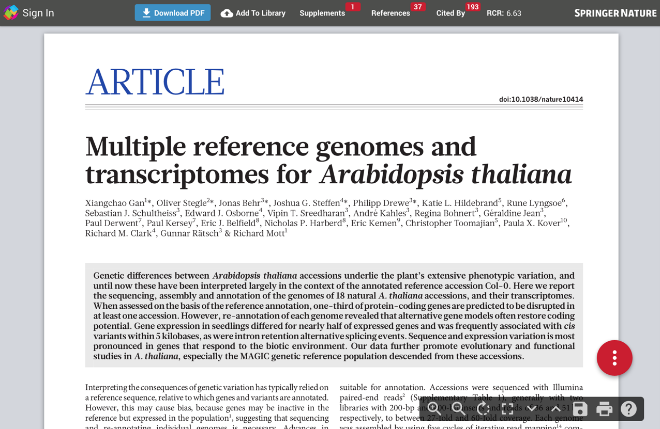

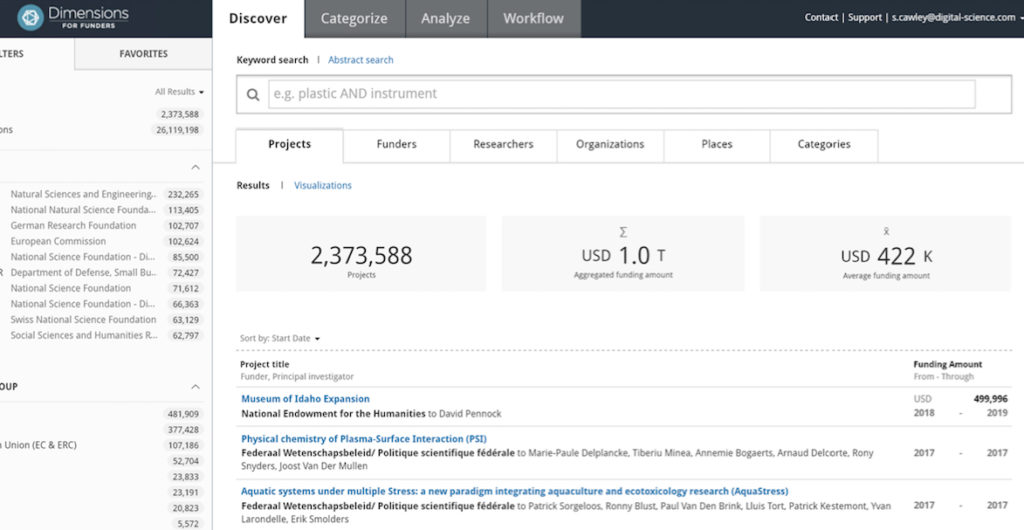

Our team at UberResearch is working to test RCR with representatives and ambassadors from a group of our partner programs. These programs include publishers, research funders, and academic research institutions. While no one metric is perfect, there is general agreement that RCR is a big leap forward in comparing publications’ citation rates across disciplines. Based on our results, Digital Science is building RCR into many of our respective products. RCR values already appear in the ReadCube viewer, which reaches over 15M unique users a year, and have been rolled out across the Dimensions database, which holds over 25M publication abstracts and over $1T in historical funding awards.

The workflow advantages of having a common value for comparing publications are enormous.

It is worth noting that the RCR continues to be developed and improved. The team at NIH are openly soliciting ideas and feedback to make further improvements to the calculation and approach, and the Digital Science bibliometrics team are engaging with the innovative ideas that the RCR has brought to the metrics world.

Since the Digital Science announcement of adopting RCR, we have seen multiple publishers, funders, and academic partners begin to weave RCR into their own evaluative processes and workflows. While every approach to computing metrics has its pros and cons, what really differentiates RCR is the combination of significant improvement in approach, cross-disciplinary application, and rapid market adoption.

As I said at the start of this post, there is no perfect metric. However, the RCR is a leap forward in a positive direction. With consistent improvement over current approaches, increased article-level measurement, wide market adoption, and the support of NIH, we believe RCR is poised to become an increasingly prominent indicator in the research metrics landscape.