Digital Science reflections on the House of Commons Science, Innovation and Technology Committee report on Reproducibility and Research Integrity.

The new Reproducibility and Research Integrity report released by the House of Commons Science, Innovation and Technology Committee is a timely reminder that Digital Science plays a critical role in supporting research integrity and reproducibility across the sector globally. The following is our response to the report’s findings.

TL;DR: What does the report say?

The report says that publishers, funders, research organisations and researchers all have a role to play in improving research integrity in the UK, with the focus on reproducibility and transparency. It recommends that funding organisations should take these factors into account when awarding grants and that the Research Excellence Framework (REF), a method for assessing how to share around £2 billion of funding among UK universities based on the quality of their research output, should score transparent research more highly. Publishers are advised to mandate sharing of research data and materials (e.g. code) as a condition of publication and to increase publication of registered reports (published research methodologies that can be peer reviewed before a study is run) and confirmatory studies. Research organisations such as universities must ensure that their researchers have the space, freedom and support required to design and carry out robust research, including mandatory training in open and rigorous research practices.

What’s new about the report?

For observers at Digital Science, who have been in the open science movement for many years, the report covers familiar ground. It makes some expected statements, such as that reproducible research should come with a data management plan, have clearly documented reproducible methods, and link to openly available code and data. These have been recognised as good practices for some time, but this report goes further to suggest that these practices should be basic expectations of all scientific research, right now.

The report also goes much further than we’ve seen before in stating that the extent to which best practice is not being followed today is the fault of the system, and not the individual researchers. Systemic barriers to best practice are identified as:

- Incentivising novel research over the reproduction of research

- Funding ‘fast’ science over more time-consuming reproducible research

- Overemphasis on traditional research metrics – volume and citations centred around papers and people – at the expense of recognising all roles in research (such as statisticians, data scientists, and research programmers)

- Journal policies and practices that do not enforce high expectations of research reproducibility on submission

- Journal policies which do not respond quickly enough when publications should be retracted

- Lack of expertise on reproducibility and statistical methods in peer review

- Lack of institutional research integrity and reproducibility training across all levels of research seniority.

To remove these barriers, the report offers 28 observations and recommendations. At their core is the sentiment:

“…the research community, including research institutions and publishers, should work alongside individuals to create an environment where research integrity and reproducibility are championed.”

If the report has a limitation, it is that it leaves the role of some parts of the research community (including research infrastructure and service providers) unexamined. As Digital Science is part of that research community, it is timely to reflect on our role in supporting research integrity and reproducibility.

What do we believe?

Digital Science’s long-held position is that research should be as open as possible and as closed as necessary for the research to be done within ethical and practical bounds, and that research should be carried out reproducibly and with integrity. Through investment in and work on tools such as Figshare and Dimensions Research Integrity, Digital Science supports researchers, research institutions, funders, governments and publishers to engage with these important topics.

Figshare

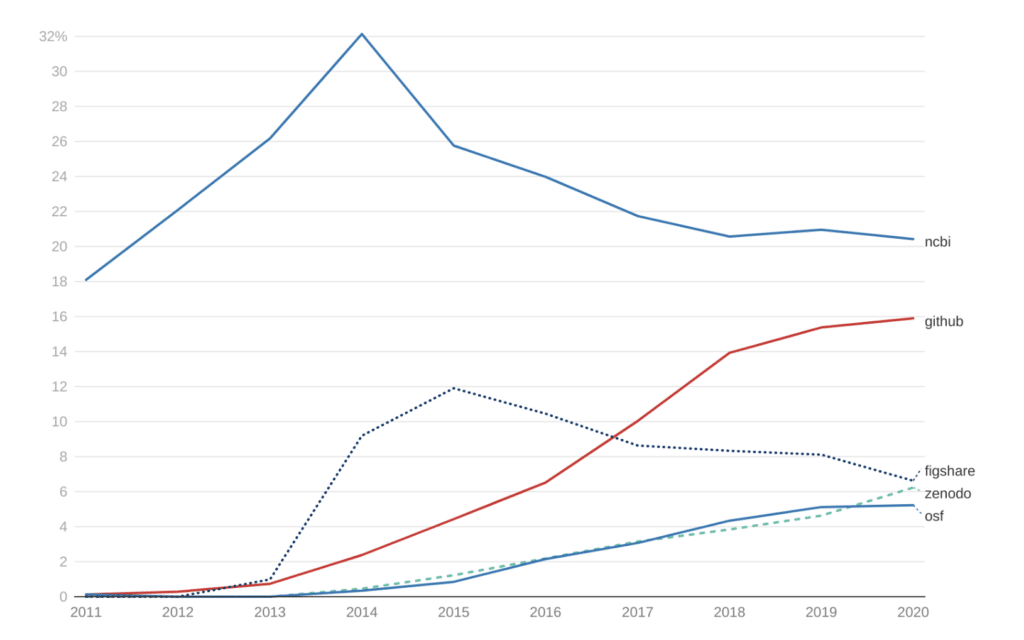

For most people, the first thing to come to mind would be the role that Figshare plays in enabling transparency and reproducibility by making it possible for any researcher anywhere to deposit and share research data with a DOI. Launched in its current form in 2012, Figshare quickly became the dominant generalist repository (based on percentage share of repository links in data availability statements). By 2020, Zenodo and OSF had joined Figshare in providing the global infrastructure for data. As institutions move to take responsibility for their own research data, Figshare has also been instrumental in helping institutions to manage their own research data repositories.

Dimensions Research Integrity

The above analysis showing the growth of data repositories is possible thanks to the Dimensions Research Integrity Dataset, a new dataset measuring research integrity practices that is the first of its kind in terms of both size and scope. Designed with research institutions, funders, and publishers in mind, Dimensions Research Integrity measures the changing practice in the communication of Trust Markers: ethics approvals, author contribution statements, data and code availability, conflicts of interest, and funding statements. Trust Markers allow a reader to ascertain whether a piece of research contains the hallmarks of ethical research practice. To our knowledge, Dimensions Research Integrity is the first available global dataset on Research Integrity practice.

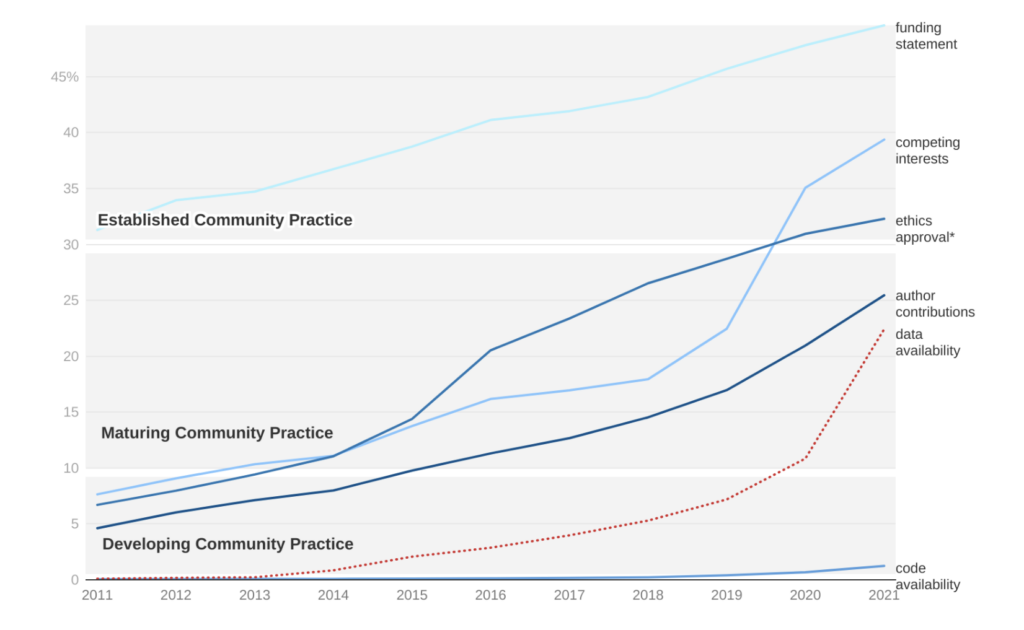

So what does the data look like for Reproducibility and Research Integrity? Taken as a global aggregate, the picture is perhaps less gloomy than the report on reproducibility and research integrity paints. Over the last three years, there has been a significant shift in the structuring of research papers to make them more transparent. Although some of these changes might be cosmetic (for instance, having a data availability statement does not mean that your data is available), they do represent a change in the research system towards research transparency. That these trust markers exist on papers at all at significant levels is down to a combination of funder policies, changing journal guidance to authors, and research integrity training at the institutional level.

*The percentage of ethics papers is calculated over publications with a MeSH classification of Humans or Animals. The ethics trust marker looks at those papers that include a specific ethics section (as opposed to mentioning ethics approval somewhere in the text).

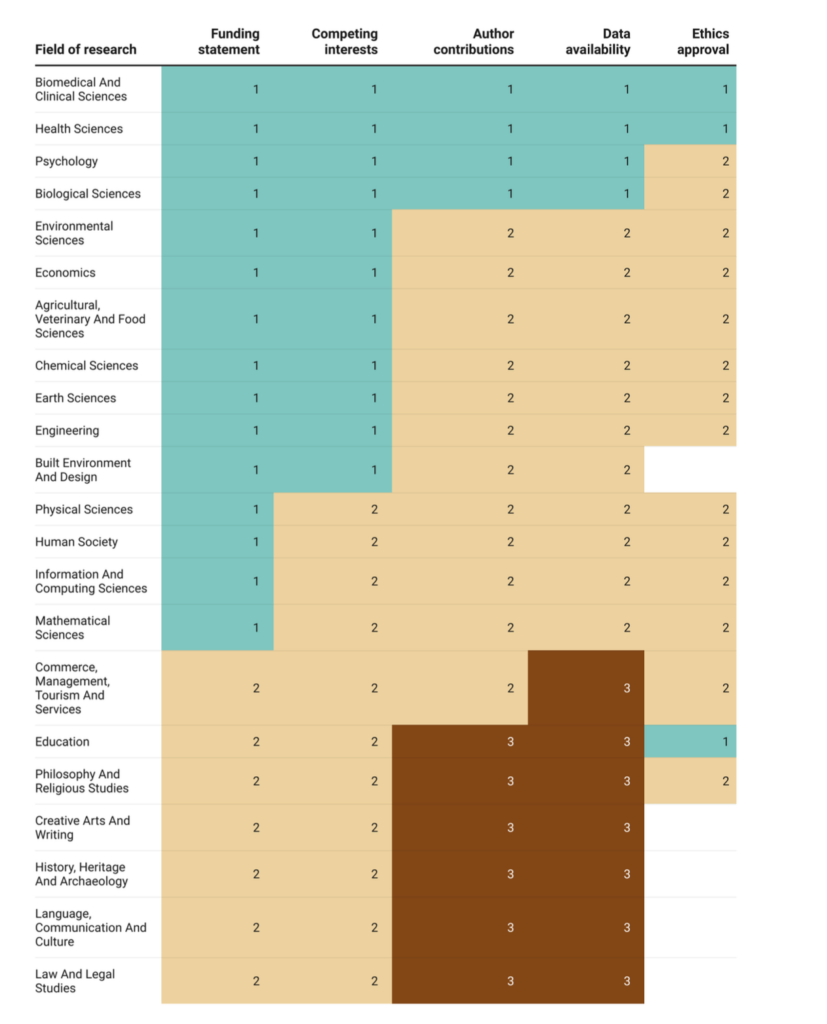

As the report on reproducibility and integrity indicates, efforts to improve transparency should be addressed across all disciplines. By looking at the data for 2021, we can get a sense of how exposed each discipline is to each of the different trust markers. Where an area has reached at least 30% coverage, it is asserted that a tipping point has been reached, and that there are few impediments to pushing towards more complete adoption, with goals set around compliance. Areas of maturing community practice (between 10% and 30%) and developing community practice (less than 10%) require a different sort of engagement, focused on education. This level of data is crucial for adapting Research Integrity training by discipline, for setting benchmarks, and for measuring how successful different research integrity interventions have been.
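For readers who want to apply the same banding to their own figures, the thresholds described above can be sketched in a few lines of Python. This is only an illustration: the function name, the stage labels as strings, and the example coverage values are our own assumptions, and the numbers are placeholders rather than data from Dimensions Research Integrity.

```python
def classify_adoption(coverage_pct: float) -> str:
    """Map a trust-marker coverage percentage to an adoption stage.

    Bands follow the text: at least 30% is a tipping point,
    10% up to 30% is maturing, and below 10% is developing.
    """
    if not 0 <= coverage_pct <= 100:
        raise ValueError("coverage must be a percentage between 0 and 100")
    if coverage_pct >= 30:
        return "tipping point"
    if coverage_pct >= 10:
        return "maturing"
    return "developing"


# Hypothetical coverage values for illustration only (not real data).
example_coverage = {
    "Discipline A": 42.0,
    "Discipline B": 18.5,
    "Discipline C": 3.2,
}

# Assign each hypothetical discipline to its adoption stage.
stages = {name: classify_adoption(pct) for name, pct in example_coverage.items()}

for name, stage in stages.items():
    print(f"{name}: {stage}")
```

Grouping disciplines this way makes it straightforward to pair each band with the kind of engagement the text suggests: compliance goals for the first band, education for the other two.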

As examples of how Dimensions Research Integrity can be used, these brief analyses are only the beginning of a broader evidence-based discussion on research integrity and reproducibility. More information on research integrity can be found in our Dimensions Research Integrity Whitepaper.

We look forward to having many more conversations with you throughout the year on Research Integrity!