Will mastery of the witchcraft of AI be good or bad for research?

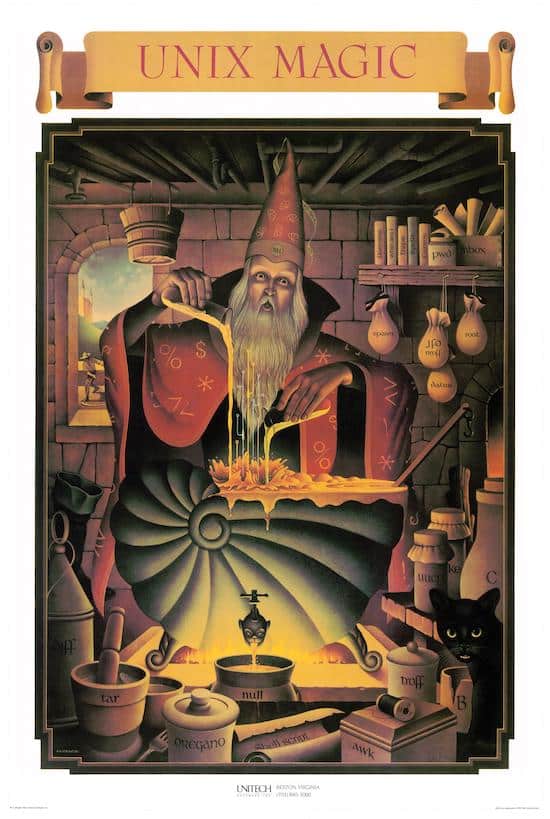

Until six months ago most of us probably hadn’t placed the words “prompt” and “engineer” in close proximity (except possibly for anyone involved in a construction project where a colleague had arrived to work consistently on time). Today, “prompt engineer” is one of a new class of jobs emerging in a Large Language Model (LLM)-fueled world. Commanding a salary in the “telephone-number” range, a prompt engineer is a modern-day programmer-cum-wizard who understands how to make an AI do their bidding.

The dark art of LLMs

Getting an AI to produce what you want is something of a dark art: as a user you must learn to write “a prompt”. A prompt is a form of words that translates what you want into something the AI can understand. Because a prompt takes the form of a human-understandable command, such as “write me a poem” or “tell me how nuclear energy works”, it appears accessible. However, as anyone who has played with ChatGPT or another AI technology will tell you, the results, while amazing, are often not quite what you asked for. This is where the dark art comes in. The prompts that we use to access ChatGPT-like interfaces lack the precision of a programming language, but they give a deft user access to a whole range of tools that could significantly expand our cognitive reach. And, because the interface uses natural language, these tools may fit more neatly into our daily lives and workflows than a programming interface would.

Mastering these new tools, just as was the case when computer programming became the tool of the 1970s, requires a whole new way of thinking: the incantation or spell that you need to cast is not obvious unless you can get inside the mind of the AI. Indeed, this strange new world is one in which words have a power that they didn’t have just a few months ago. A well-crafted prompt can conjure sonnets and stories, artworks and answers to questions across many fields. With new plug-ins, companies are able to build on LLM frameworks and extend this toolset even further.

Problematic patterns

What can seem like magic is actually an application of statistics. At their heart, LLMs have two central qualities: i) the ability to take a question and work out, from a vast sea of data, which patterns need to be matched to answer it; ii) the ability to “reverse” that pattern-matching process over the same vast sea of data, turning it into a pattern-creation process. Both qualities are statistical in nature, which means that there is some chance the engine will misunderstand your question, and a separate chance that the response it returns is fictitious (an effect commonly referred to as “hallucination”).
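To make the statistical point a little more concrete, here is a toy sketch in Python. The probability table is invented purely for illustration (a real LLM scores tens of thousands of possible tokens at every step), and the helper names are hypothetical. It shows two ways of picking a continuation, always taking the most likely word or sampling in proportion to probability, and how a fluent but fictitious continuation is still drawn some of the time. The last helper assumes, as a simplification, that each step goes wrong independently, to show how even a small per-step error rate compounds over a long answer. This captures only one source of error; hallucination can also arise when the most likely continuation is itself wrong.

```python
import random
from collections import Counter

# Hypothetical toy distribution over possible next words after a prompt such as
# "The first industrial revolution began in ..." (probabilities are invented).
NEXT_WORD_PROBS = {
    "Britain": 0.85,
    "France": 0.10,
    "Atlantis": 0.05,  # a fluent but fictitious continuation
}


def most_likely(probs):
    """Greedy choice: return the single most probable continuation."""
    return max(probs, key=probs.get)


def sample(probs, rng):
    """Sampled choice: draw a continuation in proportion to its probability."""
    words = list(probs)
    weights = [probs[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


def chance_of_any_error(p_correct_per_step, steps):
    """Chance that at least one of `steps` choices goes wrong,
    assuming (simplistically) that the steps are independent."""
    return 1 - p_correct_per_step ** steps


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs match
    draws = Counter(sample(NEXT_WORD_PROBS, rng) for _ in range(10_000))
    print("greedy:", most_likely(NEXT_WORD_PROBS))
    print("sampled counts:", dict(draws))
    # Even a step that is right 99% of the time compounds over a 200-step answer.
    print(f"P(at least one error in 200 steps) = {chance_of_any_error(0.99, 200):.2f}")
```

Roughly one draw in twenty lands on the invented answer, which is the sense in which a statistical engine can be confidently wrong.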

Hallucination is one of a number of unintended consequences of AI. Another is the loss of context. A good search on Google or a Wikipedia article brings you into contact with material that may be relevant to what you want to know, but you will most likely have to read a whole piece of writing to understand the context of your query and, as a result, you will read at least somewhat around the subject. With a specific ChatGPT prompt, by contrast, you may bring back only confirmatory information or, at the very least, information that has been synthesized specifically in response to your question, often without context.

Another issue is racism and other forms of bias. AIs and LLMs are statistical windows on the data they are trained on: if the training data are biased, then the outcome will be biased. Unfortunately, while many are working on high-level biases in AI and trying to understand how one might equip AIs with an analog of common sense, this is not yet mainstream research. More generally, I am quite hopeful that, since the issue of bias is becoming better articulated with time, we will conquer the obvious biases that show up in interactions. What concerns me more are the cumulative effects of the subtle biases that AIs adopt. The whole point of AIs as tools is that they can perceive factual information more completely and in greater depth than a human can. This is why we can no longer beat AIs at chess or Go (except by discovering critical flaws!), and it is also why we may not be able to perceive their subtler biases. In 2001: A Space Odyssey, the famous collaboration between Stanley Kubrick and Arthur C Clarke, the AI HAL interprets conflicting instructions in an unpredictable way. The rationale for HAL’s psychosis seems obvious by modern standards, but the story is prescient: when a large number of complex instructions interact, the outcome could be far more challenging to identify.

Fear and loathing in AI

Twenty years ago, we would not have recognised “social media influencer” as a job type, and twenty years from now we may not recognise “taxi driver” as one. Prompt engineers may form just one new class of jobs, but how many of us will be prompt engineers in the future? Will prompt engineers be the social media superstars of tomorrow? Will they be the programmers who fuel future tools? Or, as with using a word processor or search engine, will we all be required to learn some level of witchcraft?

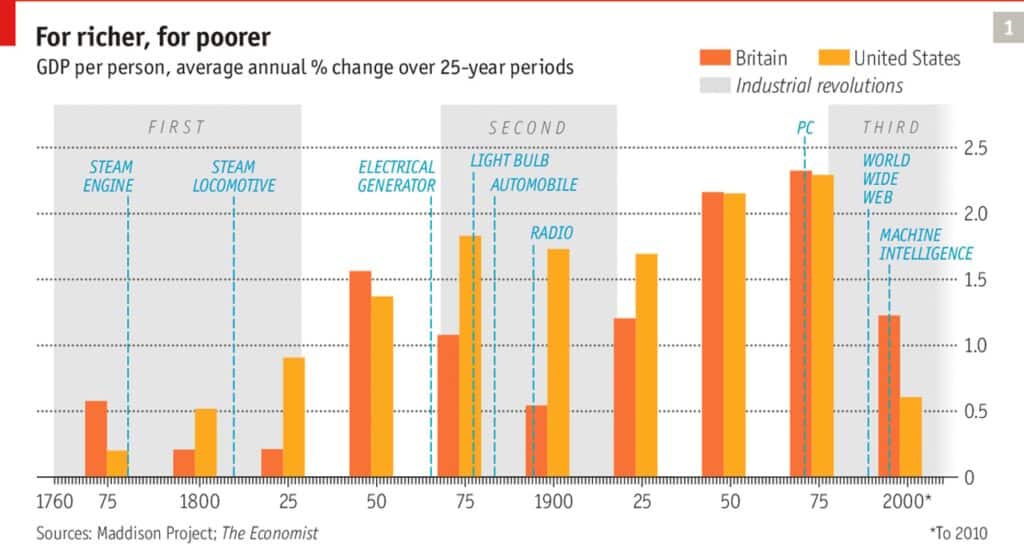

Previous industrial revolutions have been responsible for massive wealth creation as sections of society have significantly increased their productivity. At the same time, however, revolutions have also led to destitution in the parts of society that lacked either access to the new technologies or the capacity to adapt. As can be seen in Figure 1, following the first industrial revolution in the UK, average GDP per person fell between the 1760s and the 1820s. It was not until the 1830s (50 years after the introduction of the steam engine and a full 80 years after the beginning of industrialization) that GDP per person regained, and eventually eclipsed, its 1750 level. A similar pattern began in the late 1860s: GDP per person fell until the early 1900s, was well on the way back to its previous high by 1925, and eclipsed its prior peak by 1950. We are currently in a third cycle, initiated by the advent of the PC, followed by the World Wide Web and further fuelled by the rise of AI, in which GDP per person is now lower than its 1975 high.

If we view the period since the dawn of the information age, and the widespread introduction of the PC, as a continuum, then we stand in the midst of an exponential revolution in which the technologies we have built are immediately instrumental in designing and building their successors, superseding themselves over ever shorter timescales.

Moore’s Law, which predicted the growth in the capability of computer chips, may have held through the end of the last millennium. While computing power has determined the capability of AI up until this point, data are now the dominant factor in how AI will develop. And, as data limitations come into play, quantum computing may take us further still.

Despite Elon Musk’s prophecies of doom, and even the training of evil AIs or of AIs that design deadly toxins, most people just want to know whether their livelihoods are under threat in the near future. While world domination by an AI is unlikely in the near term, it is almost certain that jobs will change. However, if we view AI as the tool that it is, and think about how it can complement our work, we begin to position ourselves for the new world that is emerging. Progressive researchers and businesses alike will invest not just in learning how the current technology will revolutionize our world but also in continual professional development for their team members since, in an exponential revolution, we can expect to need to retrain not just once or twice in a career, but potentially all the time.

While the Web 2.0 era that began in the early 2000s eventually gave us massive open online courses (MOOCs), with the potential to retrain and develop ourselves, online learning has developed into a tool for companies to deliver information such as safety and compliance training, and a tool for us to learn new hobbies. Importantly, it has broadened access to education by, for example, making college and university-level courses available for free or at low cost. However, for most people, MOOCs and other learning platforms have not become a regular part of life. In an AI-fueled revolution, our capacity to learn may become critical to our employability: continuous professional development may be the new norm, with your education even being tailored to your needs by AI.

Who prompts the prompters?

ChatGPT and similar technologies show great promise in a research context. They have the capacity to extend our perception, detect subtle patterns, and enhance our ability to consume material. Their ability to summarize and contextualize existing work is already impressive. Several authors have attempted to add ChatGPT as a co-author, prompting some publishers to issue guidelines stating that ChatGPT may not be a co-author, since it cannot take responsibility for its contribution. At the same time, there are concerns over ChatGPT’s tendency to “hallucinate” answers to questions. LLMs are essentially statistical in nature: their answers correspond to the statistically most likely responses to questions, so those answers are grounded in fact only statistically and there is always a chance of untruth. This means that a literature review written by an AI may be produced quickly, but it could be both uninnovative and incorrect, since it consists of the statements that others are statistically most likely to make about the subject.

This leads to two obvious challenges (and many more besides): one is the bias of research toward particular points of view that are reinforced by repetition in the literature; the second is that AIs are clearly already capable of producing papers that look sufficiently “real” to pass peer review. Depending on who is prompting the AI, such an AI-written paper may be designed to contain fake news or factually questionable information, or otherwise to damage the scholarly record. Such approaches can already be seen in the onslaught of papers from paper mills that publishers are now seeking to address.

On the one hand, LLM technology may be able to perceive patterns beyond the capability of humans and hence point to things that humans have not thought of; on the other hand, LLMs do not generate genuine, understanding-based intellectual insight, even though it can seem otherwise. This leaves AIs at an uncomfortable intersection of capabilities until their users truly understand them.

But, as Yuval Noah Harari argues, the effect of AI tools on how we interact with each other and how we think has the potential to be profound. In Harari’s example, the critical capability that can undo society is narrative storytelling. In research, we need to preserve young researchers’ ability to formulate narrative arcs that describe their research and relate it to the work of other researchers. While machine-readable research has been a long-held aim of those of us in the infrastructure community, human readability must not go away. Asking current AI technology to write a summary or narrative for you risks missing key patterns, key results and realisations. For a mathematician, this is analogous to using a symbolic mathematics program to perform algebraic manipulations rather than having a sense of the calculation oneself. If one lacks calculational facility, it is impossible to know whether the result really is true or whether the machine has made an inappropriate assumption.

In spite of these challenges, AI tools, used as a set of intellectual augmentation capabilities, hold intriguing possibilities for researchers. AIs already play significant roles in solving certain types of differential equations, calculating optimal geometries for plasma fusion and detecting cancer: essentially any task where human pattern matching is not subtle enough or where one has to hold too many variables in a single mind.
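To make the mathematician’s analogy above a little more concrete, here is a minimal Python sketch of the kind of check that calculational facility makes possible. The “tool” is hypothetical: it stands in for any program that reports that √(x²) simplifies to x, a rule that silently assumes x ≥ 0 (the general answer is |x|). A quick numerical spot-check at a handful of points, including negative ones, exposes the hidden assumption. The function names are illustrative, and a spot-check can only reveal disagreement, never prove that two expressions are equal.

```python
import math


def original_expression(x):
    """The expression we started with: sqrt(x**2)."""
    return math.sqrt(x ** 2)


def claimed_simplification(x):
    """A hypothetical tool's answer: 'sqrt(x**2) simplifies to x'."""
    return x


def spot_check(f, g, points, tol=1e-12):
    """Return the sample points at which two expressions disagree."""
    return [p for p in points if abs(f(p) - g(p)) > tol]


failures = spot_check(original_expression, claimed_simplification,
                      [-3.0, -0.5, 0.0, 0.5, 3.0])
print("disagrees at:", failures)  # disagrees at: [-3.0, -0.5]
```

Only someone who already has a feel for the calculation would think to test negative values at all, which is precisely the author’s point.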

We started this blog by considering humans as prompt engineers for AIs, but is the real gain to come from AIs being prompt engineers for us? When we stare at a blank page trying to write an article, an AI does an excellent job of writing something for us to react to. Most of us are better editors than authors, so is one possible future one in which the AI helps with our first draft? Or does it challenge our thinking if we appear to be the ones who are biased? Is AI the tool that ensures that our logical argument hangs together? Or is it the tool that takes our badly written work and turns it into something that can be read by someone with a college-level education?

When writing a recent talk, I used ChatGPT as a sounding board. “Would you consider the current industrial revolution to be the third or fourth?” I asked. And then, “Is it generally agreed that the phrase ‘the exponential revolution’ is synonymous with the fourth industrial revolution?” Later I prompted, “Please make suggestions for an image that might be used to represent the concept of community.” The responses were helpful, as though I had an office colleague to bounce ideas off. Not all of them were accurate, but they gave me a starting point for further searches on other platforms and in the books I had available to me.

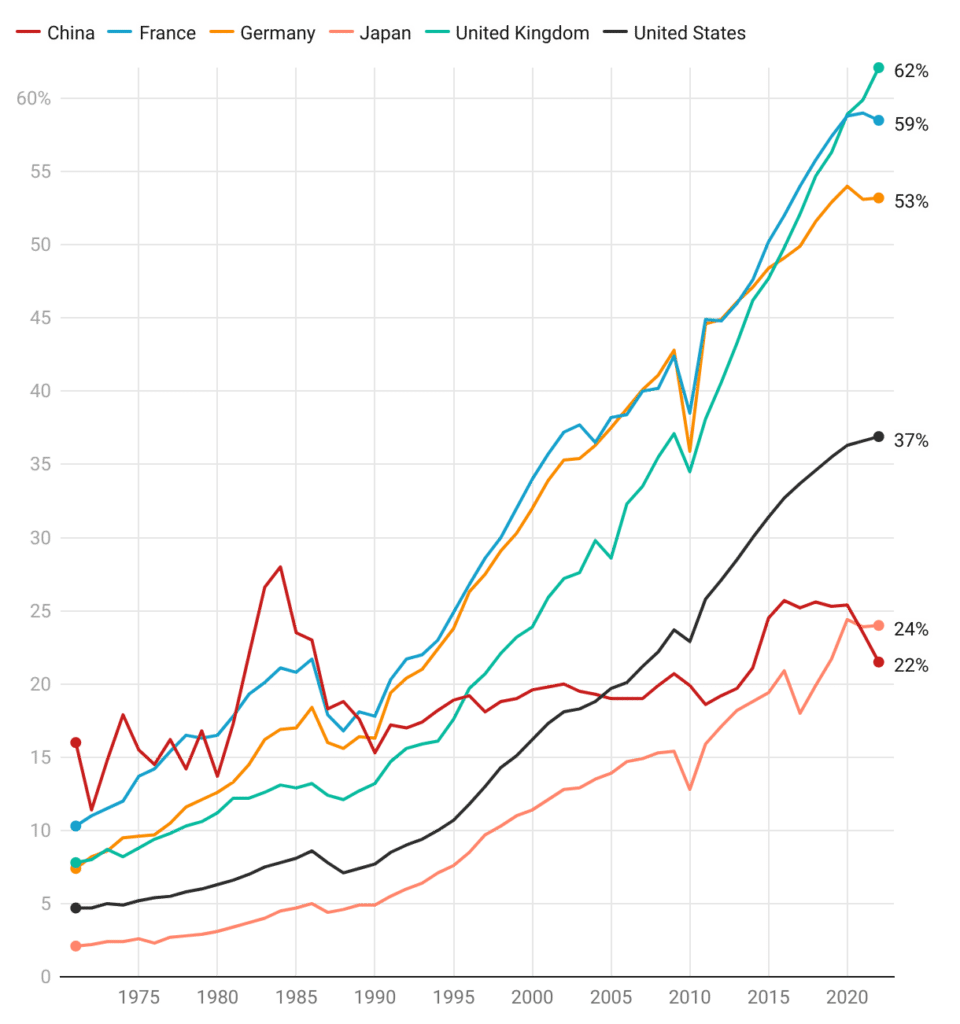

One perhaps scary notion is that AI may herald a reversal of the recent trend towards team research, back toward the days of the lone researcher. Over the last half century the world has moved towards an increasingly collaborative research agenda (see Figure 2), and collaboration has become critical in many areas. This need for collaboration often stems from the need for resources, such as equipment, that are too costly for a single institution or a single country to hold. But collaborations also often come from wanting to work with colleagues who have particular skills or perspectives. In this latter case, LLMs can be a helpful tool. I have written before that it is a conceit to believe that you can be the best writer, idea generator, experimentalist, data analyst and interpreter of data for your research. But, with LLMs, you may need to be capable of only a few of these in order to work alone once again.

Regardless of the actual future of AI and its role in research, it is clear that we are living in a world where AIs will take a role in our lives. Arthur C Clarke once commented that “Any sufficiently advanced technology is indistinguishable from magic”, and that is certainly how the outputs of LLMs appear to many of us; even those of us in the technology industry are impressed. But there is another side to witchcraft. In John le Carré’s Tinker Tailor Soldier Spy, Operation Witchcraft takes over the Circus (the centre of the British intelligence service), leaving it completely vulnerable to false information from its enemies. In what is coming, it seems that we have no choice but to master witchcraft.

Daniel Hook is CEO of Digital Science, co-founder of Symplectic, a research information management provider, and of the Research on Research Institute (RoRI). A theoretical physicist by training, he continues to do research in his spare time, with visiting positions at Imperial College London and Washington University in St Louis.