This is the story of how a school science fair inspired a passion for science communication, a PhD in microbiology, and a valuable perspective on the current AI debate.

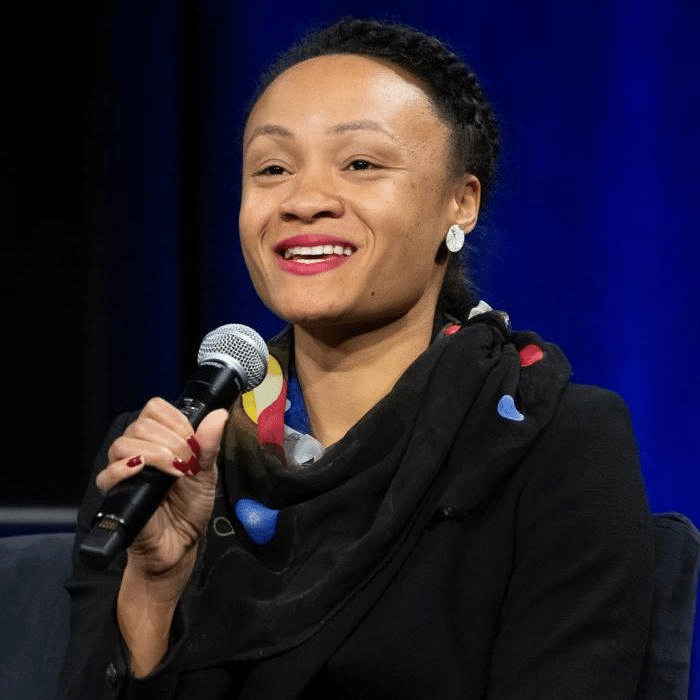

Dr Jessica Miles recently participated in the SSP2023 panel on AI and the Integrity of Scholarly Publishing, the writeup from which has just been published on the Scholarly Kitchen.

I caught up with Jessica to chat about how she came to be on the panel, her background in microbiology, and her thoughts on the future of scholarly publishing in an AI world. I got a Barack Obama impression for free 😊

Quick links

From school science fair to SSP2023

John Hammersley: Tell us how you came to be on the SSP2023 panel on AI

Jessica Miles:

It seems so surprising, right? (JH: Not at all!!) I asked myself that same question the other day — I was at the SpringerNature office and I met somebody who works on submissions who I’d connected with on LinkedIn. He stopped me and said: “I looked at your LinkedIn, your career, and it seems …he didn’t say bizarre, but went with ‘very interesting!’…and I’d love to chat about it.”

And it made me think — in respect to the SSP AI debate and my participation – that one of the reasons I was invited is precisely because I don’t have the normal profile of someone that you might expect to be participating in an AI debate. But if that’s the case, what’s interesting enough about my career that makes my perspective on this noteworthy? And the conclusion I came to is that, beyond my experience in scholarly publishing, it’s quite a bit to do with my experience with (and enthusiasm for!) science communication. I’ve been interested in science communication for a long time – when I went to school, the university I picked specifically had a program in science communication.

From an early age I learned about Michael Faraday (and his role as a science communicator) and I thought that would be something really cool to do, even though I didn’t know what that was at first! I thought I maybe wanted to do science journalism, eventually landed into research and so when I think about a lot of the different things that I’ve been interested in or done, they really fit kind of nicely within that space of science communication that I’ve been following for a long time.

So getting back to the SSP debate, if you think about the audience being in the scholarly technology space, not an audience of experts on AI, this idea of communicating science in a different way but with this sort of publishing lens comes to mind. And so I think that’s why I was really excited to get the opportunity to do this and why I think it makes sense because it’s not like I’m giving a talk at Google or to a group of AI experts, it’s really about communicating science, but at the intersection of something else, something new.

What does Mary Shelley’s Frankenstein have to do with AI?

John: Yes, I agree it’s really important that different perspectives are included (in these discussions), and that we don’t reduce AI to just being about the technology because it’s totally not just that! Chat GPT’s explosion into the public consciousness is a great example — it wasn’t so much the technology but the interaction with it that caught the imagination. So I think it’s good when forums do try to include lots of different viewpoints. But I also know what you mean in terms of not feeling qualified, because your background isn’t in the technology side of it.

Jessica:

Exactly — my PhD is in microbiology, not machine learning, and I certainly wouldn’t call myself an AI expert. So, yeah, it’s a natural question people asked of me (after the debate). And that’s the answer that I came up with after some reflection! I think having that perspective informs the way I’m thinking about these technologies. For example, I think about one of the courses that I took where we read Mary Shelley’s Frankenstein. The text of Frankenstein is not necessarily considered particularly difficult and some might ask, “Why is this a college level text?” But one of the main ideas of her work is that she’s interrogating how society is thinking about new technologies — it’s a commentary on science, and I was thinking about that recently because it feels like we’re in another similar moment where we’re having to grapple with this new technology and some people are quite fearful and some people are quite excited.

And some people worry that it will take away the mystery of the world if we can recapitulate human thought, human intelligence.

Where does that leave us from a philosophical perspective? We’re starting (or continuing) to ask if anything is sacred anymore? There are a lot of really interesting questions, and having a historical perspective feels like a nice way to approach this moment so that it’s not so overwhelming. It’s like “okay there are historical parallels, and yes this might buck those trends but at least you have some kind of grounding to approach all of this.”

We spent a lot of time on Frankenstein — we must have spent several months on it — and it’s not necessarily something you would think would require that level of rigor. But when you consider it from the context of society and all the other things that were happening around that time — industrial revolution, and the rapid pace of change that brought — there is very much an allegory meaning behind it that belies its reputation as a simple text.

Generative AI – the new normal

John: Frankenstein is a nice example of the fear a new technology can generate. Yet, we’re very good at adapting to the new normal as new things are developed – stuff that would have been seen as magical and amazing previously is then seen as expected, trivial commonplace, once it’s been (repeatedly) demonstrated.

Generative AI – for text, images, and more – is quickly becoming the new normal. So I’m curious, because I didn’t go to SSP this year and I haven’t had a chance to look over the sessions – what did you find most interesting at SSP, either from the sessions or just from the chats you had whilst you were there?

Jessica:

One topic that particularly resonated was that of the scholarly intellectual output of humans — that the ideas aren’t machine generated, and that manifests itself in terms of the written text. So you can imagine, especially in humanities, there’s a very heavy focus on making sure that the text isn’t machine generated, or at least that the ideas and the text are very much those of a human being.

On the science side however, it seemed to be not so much a concern that the text itself isn’t from humans, but what was seen as more worrying was that there’s even more active fraud with respect to data outputs. The opening plenary was from Elisabeth Bik, whom you might know from her work in ensuring scientific integrity and thoroughly investigating image manipulation.

John: Yes, Elisabeth Bik is a legend – I don’t know how she finds the energy in the face of ever more papers!

Jessica:

She talked about image manipulation and her efforts as a whole, and then focused on the potential implications for these new technologies – not so much on the text side, but on the image side with respect to making synthetic outputs. From her talk, it sounds like we’re in a challenging moment because although the general consensus was that we’re a little bit too early on with those technologies to really see the impact, everyone feels like there’s a brewing storm in terms of all the people who have had time to use these tools, and learn these tools – that we will see nefarious actors (e.g. paper mills) start to incorporate them in ways that we haven’t seen before. And that we’re ill-equipped as a publishing community to deal with it.

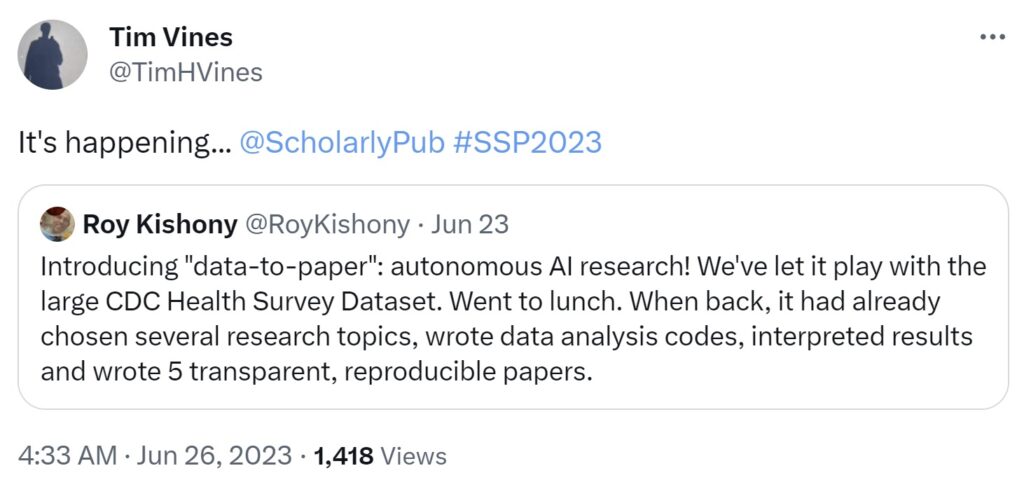

John: It’s interesting you mention that. Tim Vines shared a tweet today…

Jessica: Tim was my debate partner!

John: It’s a small world indeed! I know Tim from when he founded Axios Review, back when Overleaf was also a new kid on the block 😊 He shared this tweet and it really highlighted how close we are to researchers being able to use AI to generate plausible scientific papers.

Jessica:

This feels almost fully autonomous, not fully, but with a sort of minimal intervention. And obviously I haven’t looked at this in great detail, but it’s a huge step forward from AI “simply” helping to fine-tune something a researcher has written, like we’ve had before with grammar tools.

Barack Obama?

John: Exactly. It feels like we’re almost at the point where a researcher can ask the AI to write the paper, like e.g. a CEO could say to Chat GPT “please write five paragraphs on explaining why the company retreat has to be canceled this year and make it apologetic and sound like Barack Obama.”

Jessica: (in a deep voice) Folks, the company retreat…

John: …

Jessica: I’ve just been watching his new documentary on Netflix so I have his voice in my mind…

John: Okay.

Jessica: It’s actually quite good — he interviews people who have different roles at the same companies and internally tries to get at “what is working, what is a good job”? It’s very US focused but I thought it was quite interesting. Anyway, pivot, but we can go back to AI!

John: This is a nice aside! I saw a video excerpt of him speaking recently, where he was asked for the most important career advice for young people and I believe he said “Just learn how to get stuff done.” Because there are lots of people that can always explain problems, who can talk and talk about stuff, but if you’re the one that can put your hand up and say “That’s all right, I’ll sort that, I can handle that”, it can get you a long way.

What is the publishing industry’s moat?

John: But yes, back to AI, some of the new generative image stuff is a bit crazy – being able to use it to expand on an existing image, rather than just generate something standalone, suddenly makes it useful for a whole load of new things. And I see Google has now also released a music generator, which generates music for you based on your prompt. Everything seems to be happening faster, at a bigger scale than before, and I can see why scholarly publishing is trying to figure out how to ride this tsunami…

Jessica:

How are we going to keep up? Yeah…to borrow a phrase, “What is our moat?”

I think a lot of people are thinking about that, especially given that there’s not only Chat GPT but also all of the smaller models that proficient people can now train on their own. As a scholarly publisher, you’re serving a population that has an over-representation of people—your core demographic—who are going to be really fluent in these models, so what can you offer to them? What can you offer this group that they can’t kind of already do on their own? That’s the million dollar question.

John: Publishers would say they try to help with trust in science, and research integrity – for example, through peer review and editorial best-practices. But they also have an image problem, because there is also a tendency to chase volume, because volume generally equals more revenue, and if you’re chasing volume then you’re going to accept some papers that prove to be controversial. It’s an interesting dynamic between volume, trust and quality.

Jessica:

The volume question is always really interesting because there’s that perspective where people assume publishers have these commercial incentives to grow volume, which has some validity in an OA context of course, but there’s the other viewpoint which is that science is opening up and becoming more inclusive) and that almost by definition means publishing more papers from more authors. But restricting what people can publish…should that be up to the publishers? I do think there is a sense that publishers don’t want to be in the business of telling people they can or can’t publish. Because it’s one thing to say at a journal level, “we don’t think this paper meets the editorial bar”, it’s quite another thing to say, “we don’t think this paper ought to be published anywhere, ever”, right? In fact, I think many publishers would say the opposite: “any sound paper ought to be published somewhere.” We see this view borne out by the increasing investments publishers are making to support authors even before they submit to a journal and also to find another journal, if a paper isn’t accepted.

Another consideration that was also mentioned at the conference is that writing papers is the way that scientists communicate with each other. Dr. Bik was saying that hundreds of thousands (I forget the exact number) of papers were published on COVID research in the last three years. And she said, “Why do we need that many papers?” Well, in retrospect it’s very easy to say that, but if you are an editor working during COVID, as I once was, for which paper are you going to say “we don’t actually need this one”? Everything was happening so fast, you didn’t know what papers would be the crucial ones in the long run – how can you make that judgment? So I do think more gets published, in response to perceived need.

To be clear: you’re not going to publish anything you don’t think is scientifically sound, but most of us are not going to try to set the bar at “will this be valuable three or five years later”? That is quite a different bar than “is this just methodologically sound”?

And I don’t know if we’ve gone too far from the question on AI, but with higher volume comes this need to curate–which we’ve needed for a long time–and as the volume increases, the need to curate increases. This curation is another value-add for publishers, but also again something that AI can potentially be very good at given reasonable inputs. I want to be careful not to set up a false dichotomy of “publisher-curation” versus “AI-curation”, because publishers are very much already using AI for things like summarization and recommendation engines, but there is the question of whether publishers, moving forward, continue to drive this curation.

John: One reason you write papers as early career researchers is because you’re learning how to write a paper — you’re publishing some results that aren’t necessarily all that ground-breaking but it’s a record of what you’ve done, why you say your methodology is sound, and so forth. And in doing so you learn how to write a paper. It raises the question of how much e.g. undergraduate work should be published to give say third and fourth year students the opportunity to go through the process of getting their work published.

Jessica:

It is a fascinating question because not only is the pedagogy piece real–that early trainees, undergrads, etc need to learn how to write–but also because publishing is about putting your ideas out there and becoming known to the community.

You can present at conferences (which is another skill), but the scale of impact from that is typically much smaller, and typically it can be difficult to get to the point where you would present at a conference without having published. If you aren’t able to publish, then you’re not able to establish yourself within the community. So publishing is critical for early career researchers to get that first step on the ladder.

The value of getting things done

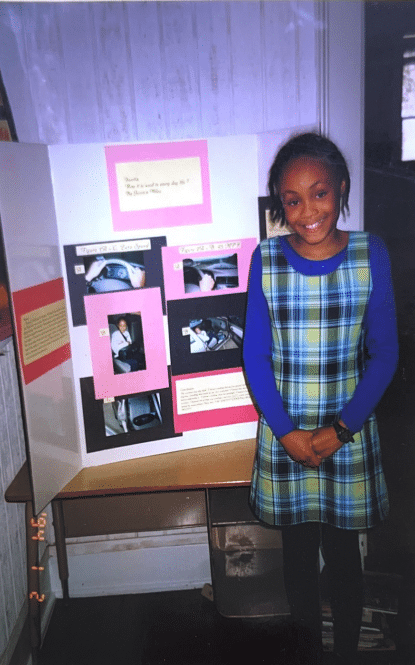

John: That brings us back almost full circle to where we started – you talked about Michael Faraday as a science communicator, and that you were into science as a kid…

Jessica: I definitely was — I did science fairs at school and that’s actually how I learned about Michael Faraday. And I hadn’t really thought about the communication of science as being distinct from the research itself but for some reason the communication aspect specifically really appealed to me. I have always been quite curious and into learning and storytelling, and being able to communicate ideas through stories.

John: As we’re nearly at time, perhaps that’s a good point to end on — the value of science fairs, and the value of doing something for yourself and getting that experience is one thing AI is not going to replace; it might be able to create the poster for you, or it might write a paper for you, but if you’re the one that’s got to stand there and say what you’ve done, it’s usually pretty obvious if you know what you’re talking about or not, and there’s no AI substitute for that.

Jessica: Yet!

Jessica Miles holds a doctorate in Microbiology from Yale University and now leads strategic planning at Holtzbrinck Publishing Group. I (John Hammersley) am one of the co-founders of Overleaf and now lead community engagement at Digital Science.