In this blog series, we will explore the fragmentation of Artificial Intelligence research. This first post lays out some of the key areas where AI research and development have become disconnected, making it more difficult to advance the field in a coordinated, ethical, and globally beneficial manner.

Artificial Intelligence (AI) is a relatively recent discipline, dating back to the 1950s, which aims to mimic the cognitive abilities of humans. After going through a few “AI winters” in the 1970s and 1990s, the field has been experiencing a boom since the 2010s thanks to increased computing capacity and the availability of large datasets.

The foundations of AI are interdisciplinary, drawing on fields across the sciences, technology, engineering, mathematics, and the humanities. Disciplines such as mathematics, computer science, linguistics, psychology, neuroscience, and philosophy provide technical capabilities, cognitive models, and ethical perspectives. Meanwhile, fields including ethics, law, sociology, and anthropology inform our understanding of AI’s societal impacts and governance. Together, this multidisciplinary approach aspires to enable AI systems that not only perform complex tasks, but do so in a way that accounts for broader human needs and societal impacts. However, significant challenges remain in developing AI that is compatible with, or directed towards, human values and the public interest. Continued effort is needed to ensure that AI’s development and deployment benefit humanity as a whole rather than exacerbate existing biases, inequities, and risks.

Global Divides

Research is globally divided: high-income countries in particular publish the most peer-reviewed papers and send the most attendees to research conferences. This is especially true in AI research, where researchers from poorer countries often move to hubs like Silicon Valley. This is partly due to the lack of cyber infrastructure in many countries (GPUs, reliable electricity, storage capacity, and so on), but also, for countries in the non-English-speaking world, to a possible lack of data available in their native language.

The concentration of AI research in high-income countries has multiple concerning consequences. First, it prioritises issues most relevant to high-income countries while overlooking applications that could benefit lower-income countries (e.g. improving access to basic needs, such as clean water and food production, or the diagnosis and treatment of diseases more prevalent in low-income regions). Second, the lack of diversity among AI researchers excludes valuable perspectives from underrepresented groups, including non-Westerners, women, and minorities. Third, policies and ethics guidelines emerging from the most active regions may not transfer well or align across borders.

In the third blog post of this series, we will investigate the global division of AI research and look into possible solutions.

Siloed knowledge

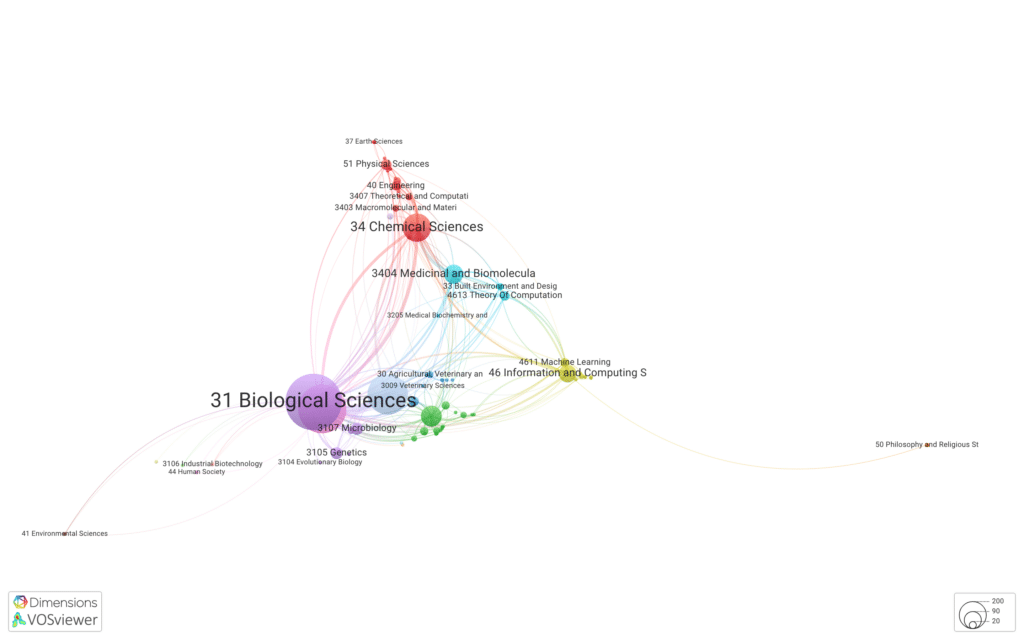

In recent years, research in AI has become so specialised that it is difficult to see where AI starts and ends. A good example is that many AI-related research publications are not actually classified as “Artificial Intelligence” in Dimensions. The AlphaFold publications, for instance, are classified under Bioinformatics and Computational Biology rather than Artificial Intelligence. Similarly, many consider Machine Learning to be a subfield of Artificial Intelligence, yet the Fields of Research classification separates the two and places them at the same level.

As AI research spreads to different fields, progress becomes harder to share. Researchers in different disciplines rarely organise conferences together, most journals specialise in one field of research, and university departments are spread across buildings, so there is less collaboration between them. Any progress, such as the progress required to make AI more ethical, is less likely to spread evenly to every applied AI field. For instance, transparency in AI, which is still in its infancy and developed thanks to collaboration between ethics and AI, will take more time to reach AI applied in Physics, Chemistry, and so on.

Do the benefits of applying AI in other research fields outweigh the difficulties in spreading AI advancements? And how much interdisciplinary collaboration actually happens? These questions will be the subject of the second blog post of this series.

Policy framework

Globally, government policies and regulations regarding the development and use of increasingly powerful large language models (LLMs) remain fragmented. Some countries have outright banned certain LLMs, while others have taken no regulatory action, allowing unrestricted LLM progress. There is currently no international framework or agreement on AI governance. Efforts like the Global Partnership on Artificial Intelligence (GPAI) aim to provide policy recommendations and best practices related to AI, which can inform the development of AI regulations and standards at the national and international levels. GPAI tackles issues related to privacy, bias, discrimination, and transparency in AI systems; promotes ethical development; and encourages collaboration and information sharing.

AI policies vary widely across national governments. In 2022, out of 285 countries, just 62 (22.2%) had a national artificial intelligence strategy, seven (2.5%) had one in progress, and 209 (73.3%) had not released anything (Maslej et al. 2023). Of those countries that took a position, the US at that time focused on promoting innovation and economic competitiveness, while the EU focused on ethics and fundamental rights. On 30 October 2023, the US President issued an executive order on safe, secure, and trustworthy AI (The White House 2023), which calls for the creation of standards and more testing, and encourages a brain gain of skilled immigrants. At a smaller scale, city-level policies on AI are also emerging, sometimes conflicting with national policies. San Francisco, for instance, banned police from using facial recognition technology in 2019.

Ultimately, AI regulations tend to restrict AI research. If regulation develops unevenly around the world, research could concentrate in centres where fewer regulations apply.

How do these varied policy attitudes affect the prospects of AI research? Will they lead researchers to migrate to less restricted regions? These questions will be addressed in another blog post.

Bibliography

Maslej, Nestor, Loredana Fattorini, Erik Brynjolfsson, John Etchemendy, Katrina Ligett, Terah Lyons, James Manyika, et al. 2023. ‘Artificial Intelligence Index Report 2023’. arXiv. https://doi.org/10.48550/arXiv.2310.03715.

The White House. 2023. ‘FACT SHEET: President Biden Issues Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence’. The White House. 30 October 2023. https://www.whitehouse.gov/briefing-room/statements-releases/2023/10/30/fact-sheet-president-biden-issues-executive-order-on-safe-secure-and-trustworthy-artificial-intelligence/.